elcomCMS SEO Checklist - New Site Go-Live

We've been working with elcomCMS for many years now, and helped with a number of site go-lives. In this post I wanted to go through a simple...

Setting up the robots.txt file correctly is important for your web site's health: Google uses it as a guide to what it should crawl and what it should skip.

I've previously referred to the robots.txt file in this post on going live with a new elcomCMS site (see point 6), but it's worth covering in more detail here.

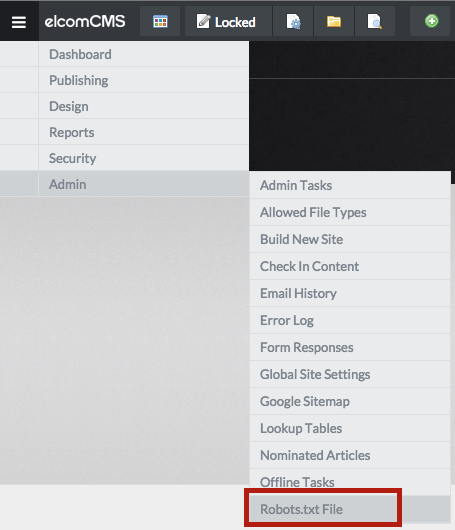

In elcomCMS, setting the robots.txt file is really easy. You can access it from the Admin menu and then edit it directly.

If a site is in staging, you'll ideally want to block Google from crawling it. Use the following to block the entire site:

```
User-agent: *
Disallow: /
```
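
Before relying on the staging rules, it can help to confirm they behave as intended. Below is a minimal sketch using Python's standard-library `urllib.robotparser`; the host name `staging.example.com` and the sample paths are hypothetical. Note that the rules are kept on consecutive lines: Python's parser treats a blank line as the end of a group, so a blank line between `User-agent` and `Disallow` can cause the `Disallow` rule to be ignored. Python's parser is not Google's, so treat this as a sanity check rather than a guarantee of how Googlebot will behave.

```python
from urllib.robotparser import RobotFileParser

# Staging rules from above, with no blank line between User-agent and Disallow.
STAGING_ROBOTS = """\
User-agent: *
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content supplied as a string (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


staging = build_parser(STAGING_ROBOTS)

# Hypothetical staging URLs: every one should be blocked for every crawler.
for url in ["https://staging.example.com/",
            "https://staging.example.com/products/widget"]:
    print(url, "allowed:", staging.can_fetch("Googlebot", url))
```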

Once the site is live, you usually only want to block a few items; blocking the login page, for example, is reasonably common. You can also block entire directories, such as an FTP folder:

```
User-agent: *
Disallow: /cmlogin.aspx
Disallow: /ftp/
```
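
The same approach can check the live rules: the login page and the FTP folder should be blocked, while ordinary content stays crawlable. This is a sketch using Python's `urllib.robotparser`; the content paths such as `/products/widget` and `/ftp/price-list.pdf` are hypothetical examples.

```python
from urllib.robotparser import RobotFileParser

# Live rules from above.
LIVE_ROBOTS = """\
User-agent: *
Disallow: /cmlogin.aspx
Disallow: /ftp/
"""

live = RobotFileParser()
live.parse(LIVE_ROBOTS.splitlines())

# Hypothetical paths mapped to whether a crawler should be allowed to fetch them.
checks = {
    "/cmlogin.aspx": False,        # login page: blocked
    "/ftp/price-list.pdf": False,  # anything under /ftp/: blocked
    "/": True,                     # home page: crawlable
    "/products/widget": True,      # ordinary content: crawlable
}

for path, expected in checks.items():
    allowed = live.can_fetch("Googlebot", path)
    status = "ok" if allowed == expected else "UNEXPECTED"
    print(f"{path}: allowed={allowed} ({status})")
```

Because each `Disallow` value is matched as a path prefix, `/ftp/` covers every file inside that folder without listing them individually.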

Be very careful when going live with a site. I often see sites move from staging to live while keeping their staging robots.txt file, and in the process block their entire live site from Google! Make sure you check your robots.txt file when going live.
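
One way to make that check routine is a small go-live test that fails if the rules stop a crawler from fetching the home page. The sketch below assumes you paste in the robots.txt content from the elcomCMS admin screen; the function name `homepage_blocked` is hypothetical. To check the deployed file instead, `RobotFileParser` also provides `set_url()` and `read()`, which fetch it over HTTP.

```python
from urllib.robotparser import RobotFileParser


def homepage_blocked(robots_txt: str, user_agent: str = "Googlebot") -> bool:
    """Return True if these rules stop `user_agent` from crawling the home page.

    A blocked home page is the typical symptom of a staging robots.txt
    ('Disallow: /') being carried over to the live site.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


staging_rules = "User-agent: *\nDisallow: /\n"
live_rules = "User-agent: *\nDisallow: /cmlogin.aspx\nDisallow: /ftp/\n"

print(homepage_blocked(staging_rules))  # True: do not go live with this file
print(homepage_blocked(live_rules))     # False
```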
